I am Simon (Han YANG)! I am currently a Ph.D. candidate in Computer and Information Engineering (CIE) at the Medical Micro Robotics Lab (MMRL), The Chinese University of Hong Kong, Shenzhen (CUHKsz), supervised by Prof. Zhuoran Zhang and Prof. Yu Sun.

Before that, I received my B.S. degree from Hong Kong Baptist University and worked as a research assistant at the Key Laboratory for Artificial Intelligence and Multi-Modal Data Processing of the Department of Education of Guangdong Province, Beijing Normal-Hong Kong Baptist University (BNBU), working with Prof. Amy Hui Zhang until 2023.

My research interests include robotic micromanipulation systems, 3D visual perception, reinforcement learning, and embodied intelligence for robots.

You can email me at hayang12 [at] link [dot] cuhk [dot] edu [dot] cn.

News

Publications

Fine-Grained Classification for Depth Estimation from Monocular Microscopy for Robotic Micromanipulation of Motile Cells

Manipulation of motile cells is crucial for biological research and clinical applications. However, obtaining Z-axis visual feedback under monocular microscopy remains a challenge for robotic micromanipulation. This paper addresses this challenge by reformulating depth estimation as a fine-grained multi-class depth classification problem that exploits the shallow depth-of-field characteristic of microscopy.
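As a rough illustration of what treating depth as classification involves, the sketch below quantizes a continuous Z-position into uniform depth bins (the class labels) and decodes classifier outputs back into a depth, either by taking the center of the predicted bin or by a probability-weighted average of bin centers. The uniform binning, the ±50 µm range, the 20-class count, and all function names are hypothetical choices for illustration, not the settings or code used in the paper.

```python
import numpy as np

def depth_to_class(z_um, z_min_um, z_max_um, num_classes):
    """Quantize continuous depths (micrometers) into uniform depth-bin labels."""
    z = np.clip(np.asarray(z_um, dtype=float), z_min_um, z_max_um)
    bin_width = (z_max_um - z_min_um) / num_classes
    labels = np.floor((z - z_min_um) / bin_width).astype(int)
    # A depth exactly at z_max would land in bin num_classes; fold it into the last bin.
    return np.minimum(labels, num_classes - 1)

def bin_centers(z_min_um, z_max_um, num_classes):
    """Depth value at the center of each bin."""
    bin_width = (z_max_um - z_min_um) / num_classes
    return z_min_um + (np.arange(num_classes) + 0.5) * bin_width

def class_to_depth(labels, z_min_um, z_max_um, num_classes):
    """Hard decoding: return the center of the predicted bin."""
    return bin_centers(z_min_um, z_max_um, num_classes)[np.asarray(labels)]

def expected_depth(probs, z_min_um, z_max_um, num_classes):
    """Soft decoding: probability-weighted average of bin centers (probs has shape (..., K))."""
    centers = bin_centers(z_min_um, z_max_um, num_classes)
    return np.sum(np.asarray(probs, dtype=float) * centers, axis=-1)

# Hypothetical setup: a +/-50 um range split into 20 classes of 5 um each.
labels = depth_to_class([-50.0, -47.5, 0.0, 49.9, 50.0], -50.0, 50.0, 20)
print(labels)
print(class_to_depth(labels, -50.0, 50.0, 20))
```

Narrower bins make neighboring classes correspond to focal planes that look increasingly alike, which is why a fine-grained classifier is needed to separate them; soft decoding can also return depths between bin centers.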

Fully On-Smartphone: Efficient Speed-Up of Sperm Tracking Algorithm for Point-of-Care Semen Analysis

Objective: To develop a low-cost, accurate, fully on-smartphone system for point-of-care semen analysis and to address the computational bottlenecks of dense sperm tracking on resource-constrained devices. Methods: A portable optical attachment was developed that enables microscopic video acquisition through consumer-grade smartphone cameras.

Ape Optimizer: A P-Power Adaptive Filter-Based Approach for Deep Learning Optimization

Inspired by the least mean p-power (LMP) algorithm from the field of adaptive filtering, we propose a novel optimizer called Ape for deep learning. Ape integrates a p-power adjustment mechanism to compress large gradients and amplify small ones, mitigating the impact of heavy-tailed gradient distributions. It also employs an approach for estimating second moments tailored to α-stable distributions.
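The compress-large, amplify-small behavior described above can be seen in isolation with a sign-preserving power transform. The sketch below assumes the adjustment takes the form sign(g)·|g|^p with 0 < p < 1 and applies it inside a plain SGD step; the exact form used in Ape, its choice of p, and its α-stable second-moment estimator are not reproduced here, and both function names are hypothetical.

```python
import numpy as np

def p_power(g, p=0.5):
    """Sign-preserving p-power transform sign(g) * |g|**p.

    For 0 < p < 1, components with |g| > 1 shrink and components with |g| < 1 grow,
    so the spread between large and small gradient entries is reduced.
    """
    g = np.asarray(g, dtype=float)
    return np.sign(g) * np.abs(g) ** p

def p_power_sgd_step(w, g, lr=0.1, p=0.5):
    """Hypothetical plain SGD step on p-power-transformed gradients.

    This omits Ape's second-moment estimator for alpha-stable gradients entirely.
    """
    return np.asarray(w, dtype=float) - lr * p_power(g, p)

grad = np.array([100.0, 4.0, 0.25, -0.01])
print(p_power(grad))  # large entries compressed, small entries amplified, signs kept
```

With p = 1 the transform returns the raw gradient, so p acts as a knob between ordinary gradient steps (p = 1) and sign-like steps (p close to 0), which is one way to limit the influence of rare, very large gradient entries from a heavy-tailed distribution.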

A method for estimating the depth information of motile cells and a robotic micromanipulation device [CN202411923420.7]

The present invention relates to the fields of robotics and image processing, specifically to a method for estimating the depth information of motile cells and a robotic micromanipulation device.

Weakly-Supervised Depth Completion during Robotic Micromanipulation from a Monocular Microscopic Image

This paper addresses the challenge of acquiring dense depth information during robotic cell micromanipulation. A weakly-supervised depth completion network is proposed that takes cell images and sparse depth data obtained by contact detection as input and generates a dense depth map.
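One common way to set up this kind of weak supervision, sketched below under stated assumptions, is to feed the network the image, the sparse depth map, and a validity mask as separate channels, and to compute the training loss only at pixels where contact detection actually produced a depth measurement. The three-channel layout, the L1 loss, and the function names are illustrative assumptions, not the architecture or loss used in the paper.

```python
import numpy as np

def build_input(image, sparse_depth, valid):
    """Stack image, sparse depth (zeroed where invalid), and validity mask as (3, H, W)."""
    valid = np.asarray(valid, dtype=bool)
    depth = np.where(valid, sparse_depth, 0.0)
    return np.stack([np.asarray(image, dtype=np.float32),
                     depth.astype(np.float32),
                     valid.astype(np.float32)], axis=0)

def sparse_l1_loss(pred_dense, sparse_depth, valid):
    """Weak supervision: mean absolute error at measured pixels only."""
    valid = np.asarray(valid, dtype=bool)
    if not valid.any():
        return 0.0  # no contact-detection samples in this image
    pred = np.asarray(pred_dense, dtype=float)
    target = np.asarray(sparse_depth, dtype=float)
    return float(np.abs(pred[valid] - target[valid]).mean())

# Toy 2x2 example: only two pixels carry a measured depth.
pred = np.array([[1.0, 2.0], [3.0, 4.0]])
sparse = np.array([[1.5, 0.0], [0.0, 3.0]])
valid = np.array([[True, False], [False, True]])
print(build_input(np.ones((2, 2)), sparse, valid).shape)
print(sparse_l1_loss(pred, sparse, valid))
```

An explicit mask is used instead of treating zero as "missing" because relative Z-positions in micromanipulation may legitimately be zero or negative.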

Depth Estimation for Motile Cell under Monocular Microscopy Based on Fine-grained Classification

This paper models depth estimation as a multi-class classification task and introduces a fine-grained feature extraction block to discern subtle distinctions between focal planes.

HQNet: An Efficient Convolutional Neural Network for Cervical Cancer Classification

In this paper, an efficient deformable convolutional neural network (HQNet) was constructed to identify cervical cancer cells at different stages of development.

WTBNeRF: Wind Turbine Blade 3D Reconstruction by Neural Radiance Fields

Our method, WTBNeRF, is a network dedicated to rendering wind turbine scenes and reconstructing wind turbines in 3D.
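WTBNeRF builds on Neural Radiance Fields. As background, the sketch below shows the standard NeRF volume-rendering quadrature, which composites per-sample densities and colors along one camera ray into a pixel color and an expected depth. It is the generic NeRF formulation, not WTBNeRF's wind-turbine-specific network; the function name and the toy inputs are assumptions.

```python
import numpy as np

def composite_ray(sigmas, colors, t_vals):
    """Generic NeRF quadrature along one ray.

    C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i,
    T_i = exp(-sum_{j<i} sigma_j * delta_j), delta_i = t_{i+1} - t_i.
    """
    sigmas = np.asarray(sigmas, dtype=float)
    colors = np.asarray(colors, dtype=float)
    t_vals = np.asarray(t_vals, dtype=float)
    deltas = np.diff(t_vals, append=1e10)  # last sample extends to "infinity"
    alphas = 1.0 - np.exp(-sigmas * deltas)  # opacity of each ray segment
    # Transmittance: probability the ray reaches sample i without being absorbed.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    depth = (weights * t_vals).sum()
    return rgb, depth, weights

# Toy ray: empty space, then a dense green surface at t = 2, then a blue sample behind it.
rgb, depth, weights = composite_ray([0.0, 1e6, 0.0],
                                    [[1, 0, 0], [0, 1, 0], [0, 0, 1]],
                                    [1.0, 2.0, 3.0])
print(rgb, depth)
```

The per-sample weights also yield an expected depth along each ray, which is one way NeRF-style models are used to recover geometry; how WTBNeRF extracts its 3D reconstruction is not specified here.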

Experience

Research Assistant

Robotic Micromanipulation System, IVF, Cell Surgery, 3D Visual Perception

3D Reconstruction and Computer Vision.

Research Assistant

Research on medical image processing for cervical cancer image classification.

Python Engineer Intern

Python Data Analysis, NLP, Cloud Database.

Posts

Helpful Resources

A growing resource list that might be useful to researchers and graduate students. I plan to update this frequently.

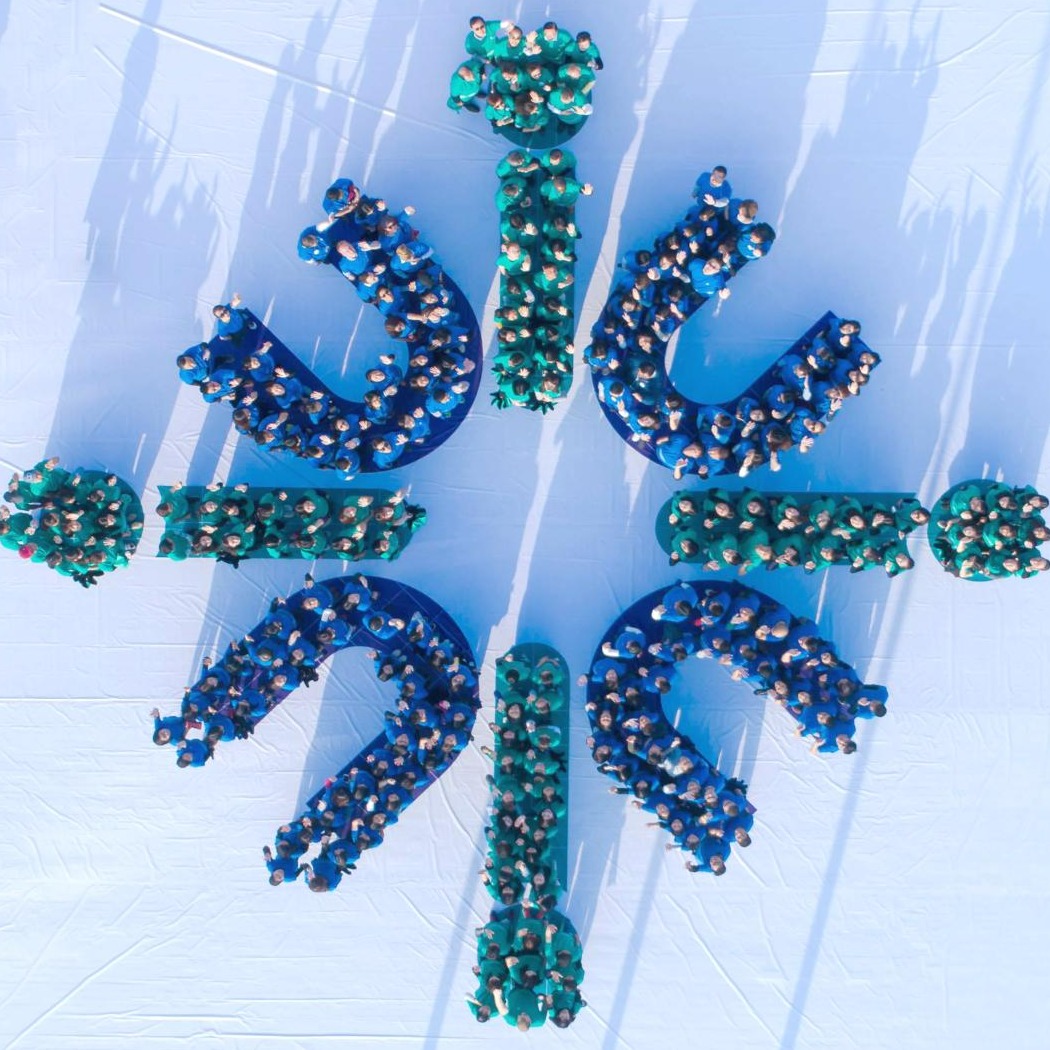

Media Coverage

Contact

The Chinese University of Hong Kong, Shenzhen, 2001 Longxiang Blvd., Longgang District, Shenzhen